This is a page about gaps.

It looks at how gaps are the biggest problem in building software today, how technology doesn't resolve gaps but human interaction can, and how assuming that something is obvious can be a mistake. Here we scratch the surface of two gap types relevant to our work: perceptive and functional.

Perceptive gaps

Perceptions can change depending on your viewpoint.

Is the front face at the bottom left or the top right?

We could say it depends on how you're looking.

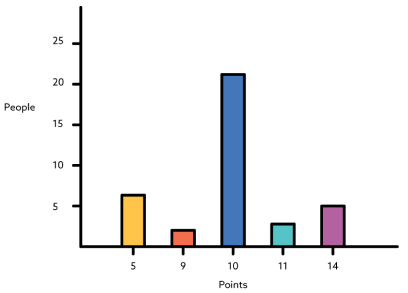

Subjectivity in life is not limited to visual tricks. Suppose we had the requirement "Process X for every point on Y" and applied it to a simple example such as a five-pointed star.

This raises the blindingly obvious question: "How many points does a five-pointed star have?"

Count the points.


Who's right and who's wrong here?

Often several answers are completely valid; it depends on perspective. The situation demands a more exacting specification.
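To make the ambiguity concrete, here is a minimal Python sketch of "Process X for every point on Y" applied to a star, under two readings of "point". All names (`star_outline`, `points_as_tips`, `points_as_vertices`) are hypothetical, and the inner radius of 0.4 is an arbitrary illustrative value. Both functions are faithful to the words of the requirement, yet X runs 5 times under one reading and 10 under the other.

```python
import math


def star_outline(n_tips: int = 5, outer_r: float = 1.0, inner_r: float = 0.4):
    """Corners of an n-pointed star outline, alternating outer tip and inner notch.

    inner_r is an arbitrary illustrative value, not a geometric requirement.
    """
    vertices = []
    for k in range(2 * n_tips):
        r = outer_r if k % 2 == 0 else inner_r
        angle = math.pi / 2 + k * math.pi / n_tips  # start at the top, step evenly
        vertices.append((r * math.cos(angle), r * math.sin(angle)))
    return vertices


def points_as_tips(vertices):
    # Reading A: a "point" is a tip of the star (every other corner).
    return vertices[0::2]


def points_as_vertices(vertices):
    # Reading B: a "point" is any corner of the outline, tips and notches alike.
    return list(vertices)


if __name__ == "__main__":
    star = star_outline()
    print("X runs", len(points_as_tips(star)), "times under reading A")
    print("X runs", len(points_as_vertices(star)), "times under reading B")
```

Neither function is wrong; the requirement simply never said which one it meant.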

Everything is vague to a degree you do not realise till you have tried to make it precise

Bertrand Russell

The Philosophy of Logical Atomism

Functional gaps

Let's move from the abstract to a real system requirement:

"Pay submitting party £2 when 100 forms are submitted in a day"

OK, this seems clear. But if we dig in a bit:

- What if only 50 forms get submitted on a particular day? Pay £1 or nothing at all?

- What should happen with just one submitted form?

- What if 99 forms are submitted on a Monday and 1 more at 00:01 on Tuesday? No pay at all?

- 773 forms submitted in a day? Do we round down and pay £14, round up to 800 forms and pay £16, or scale it and pay £15.46?

It takes only a few examples, approached with a testing mindset, to expose a robust-sounding requirement as weak and in need of more depth.
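The questions above can be made concrete in code. The Python below is a hypothetical sketch, not part of any real system: each function is one defensible reading of "Pay submitting party £2 when 100 forms are submitted in a day". The first three match the round-down, round-up and scaling options in the list; `pay_threshold_only` is an extra literal reading added here as an assumption. Running them side by side shows how far apart the answers can be.

```python
import math

RATE_GBP = 2.0  # £2, from the requirement
BLOCK = 100     # forms per payment, from the requirement


def pay_complete_blocks(forms: int) -> float:
    """Round down: pay only for each complete block of 100 forms."""
    return (forms // BLOCK) * RATE_GBP


def pay_rounded_up(forms: int) -> float:
    """Round up: any partial block counts as a full one."""
    return math.ceil(forms / BLOCK) * RATE_GBP


def pay_pro_rata(forms: int) -> float:
    """Scale: pay in proportion to the number of forms, to the nearest penny."""
    return round(forms * RATE_GBP / BLOCK, 2)


def pay_threshold_only(forms: int) -> float:
    """Literal reading (an assumption): a single £2 once 100 forms are reached."""
    return RATE_GBP if forms >= BLOCK else 0.0


INTERPRETATIONS = {
    "round down": pay_complete_blocks,
    "round up": pay_rounded_up,
    "pro rata": pay_pro_rata,
    "threshold": pay_threshold_only,
}

if __name__ == "__main__":
    for forms in (1, 50, 99, 100, 773):
        row = ", ".join(f"{name}: £{fn(forms):.2f}" for name, fn in INTERPRETATIONS.items())
        print(f"{forms:>4} forms -> {row}")
```

For 773 forms the four readings pay £14, £16, £15.46 and £2 respectively; for 50 forms they pay nothing, £2, £1 and nothing. Every function "implements the requirement".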

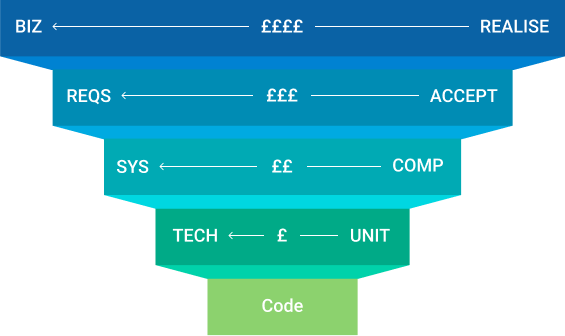

A modified V-Model. It is still a model of verification:

The left-hand side (LHS) depicts design stages and the right-hand side (RHS) testing stages.

- Tech requirements verified by Unit tests

- System requirements verified by Component tests

- Functional requirements verified by Acceptance tests

- Business requirements verified by Realisation

What's actually interesting to this discussion is:

- The relative costs of rectifying defects at each stage

- The top row, where a business case gets verified only by realisation: by the software in the hands of end-users!

Tech problems are cheaper to fix than conceptual problems.

Finding problems late is costly. Finding that you've built a product your customer didn't want is very expensive.

'Are we building the right thing?' is more important than 'Are we building the thing right?'

With this firmly in mind, it becomes obvious that sense-checking our specifications as early as possible is well worth doing.

Specification by Example

What is this?

It's a communication technique: a natural and elegant process for elaborating software business rules. Its biggest benefit is not testing or automation but improved communication and mutual understanding across your implementation team.

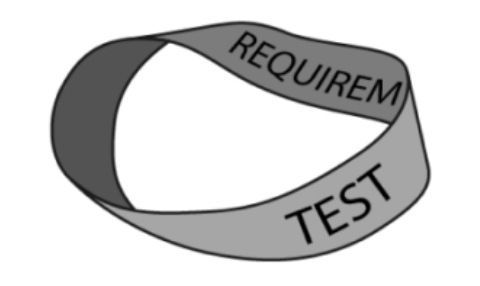

Writing requirements and testing are interrelated, much like the two sides of a Möbius strip.

Robert C Martin - Test & Requirement

Classical project requirements focus on what, not why. Such imperative requirements are effectively a proposed solution to a problem, made without investigating what the problem actually is. They give a false impression of an exacting specification.

Realistic examples make us think harder and leave far less chance for misunderstanding. It becomes easier to spot gaps, missed cases and inconsistencies.

- We get different roles involved in nailing down requirements as examples

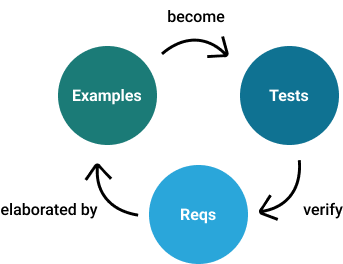

- We exploit the relationships between tests, examples and requirements

- We continue using realistic examples consistently throughout the project

- We discuss examples to prevent information falling through gaps

- Discussion around examples builds up team understanding of the domain

We'll want to automate these examples, and for a computer to run them they need to be exact.

This essentially brings testing questions and scripting rigour to the requirements process: the specifications become tests.
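As a sketch of what "the specifications become tests" can look like, the Python below writes the functional-gap questions as a table of concrete examples and checks an implementation against it. The rule it implements (£2 per complete block of 100 forms, counted per calendar day, so a submission at 00:01 on Tuesday does not count towards Monday) is a hypothetical decision the team might reach after discussing the examples; it is not stated in the original requirement. The names `daily_payments`, `EXAMPLES` and `check_examples` are illustrative.

```python
from collections import Counter
from datetime import date, datetime

RATE_GBP = 2  # £2 per block, from the requirement
BLOCK = 100   # forms per block, from the requirement

# Fixed timestamps for the examples: 1 January 2024 was a Monday.
MONDAY_NOON = datetime(2024, 1, 1, 12, 0)
TUESDAY_0001 = datetime(2024, 1, 2, 0, 1)


def daily_payments(submissions: list[datetime]) -> dict[date, int]:
    """Hypothetical agreed rule: £2 per complete 100 forms, counted per calendar day."""
    per_day = Counter(ts.date() for ts in submissions)
    return {day: (count // BLOCK) * RATE_GBP for day, count in per_day.items()}


# The specification, written as examples the whole team can read:
# (description, forms on Monday, forms on Tuesday, £ paid Monday, £ paid Tuesday)
EXAMPLES = [
    ("exactly 100 forms in a day", 100, 0, 2, 0),
    ("a single form earns nothing", 1, 0, 0, 0),
    ("50 forms earns nothing", 50, 0, 0, 0),
    ("99 on Monday plus 1 at 00:01 on Tuesday", 99, 1, 0, 0),
    ("773 forms rounds down to 700", 773, 0, 14, 0),
]


def check_examples() -> list[str]:
    """Run every example against the implementation and collect any mismatches."""
    failures = []
    for desc, monday, tuesday, pay_mon, pay_tue in EXAMPLES:
        submissions = [MONDAY_NOON] * monday + [TUESDAY_0001] * tuesday
        paid = daily_payments(submissions)
        got = (paid.get(MONDAY_NOON.date(), 0), paid.get(TUESDAY_0001.date(), 0))
        if got != (pay_mon, pay_tue):
            failures.append(f"{desc}: expected {(pay_mon, pay_tue)}, got {got}")
    return failures


if __name__ == "__main__":
    failures = check_examples()
    print("all examples pass" if not failures else "\n".join(failures))
```

Each row in `EXAMPLES` reads as a business rule a product owner can review, yet it is exact enough for a computer to run. If the team later settles on a different rounding or day-boundary rule, changing the expected values in the table is the specification change.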

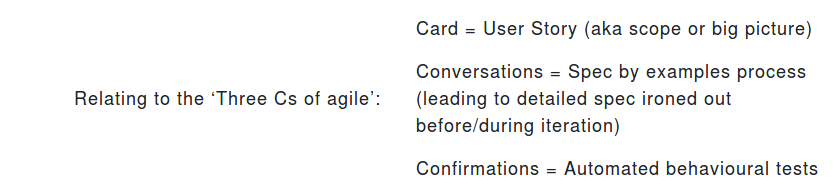

User stories provide a high level plan and facilitate discussion, but they do not specify any details. Agile acceptance tests, on the other hand, provide specification details but do not even try to put things into a wider perspective. Used together, these two techniques really complement each other.

Putting effort into creating an agile specification process promotes project success on many levels